The digital artists fighting back against AI

- Text by Georgina Findlay

- Illustrations by @NoAiArt via Twitter

Nicholas Kole, a 35-year-old Vancouver-based character designer and illustrator, lights up when he recalls his entry into art. “When I was a teenager and was watching the Lord of the Rings movies, a bit flipped in my brain and lit a spark in me,” he says. “I got really excited about character design and the minutiae of how this stuff was made.” Nicholas’s mother is an illustrator, and he was raised in a creative environment where drawing was encouraged.

Having worked full-time in the digital art industry for 13 years as a character designer in video games, comic illustrator, toy designer for Hasbro and Mattel and TV animator – most recently on Netflix’s Scrooge – Nicholas has witnessed the growing impact of AI in all corners of the industry. But while the technologies have been under development for decades, it’s only in the last couple of months that the concerns of artists like Nicholas have sparked a global debate about AI and its place in the arts.

Nicholas says that the current models of AI-generated art are based on the “unethical scraping of data from our collective portfolios” – a reference to LAION-5B, a publicly available AI-training dataset containing images and captions scraped from the internet. Images of artwork are generally collected through web scraping without the consent of creators or copyright owners. These billions of images are then used to train the AI algorithm to create new artwork through a long chain of steps involving processing, classifying, altering and manipulating the original data. “To myself and a lot of us who are protesting, [this] amounts to theft of our intellectual property,” Nicholas explains.

The last decade has seen massive advances in areas of AI called machine learning and deep learning. In simple terms, these are very complex algorithms based on digesting and finding patterns in vast troves of data harvested from the internet. Using this data, AI then applies advanced statistical techniques to generate recommendations, predictions, text or new imagery.

But in the last couple of months, key developments in an area known as diffusion models have unlocked a whole new set of services and applications collectively called ‘generative AI’. American company OpenAI adapted a version of GPT-3, the language model family behind its chatbot ChatGPT, to produce the well-known image generation program DALL-E in early 2021, and its diffusion-based sequel, DALL-E 2, followed in 2022.

While a group of ‘critical AI artists’ and engineers with access to machine learning technology have been integrating it into their work in nuanced ways since the beginning, illustrators like Nicholas do not find their creative process aided by AI at all. “I don’t see anything that I do on a day-in day-out basis that’s helped by AI,” he says. “When I sit down to design, I’m getting into the nuances of the context, I’m talking to many different stakeholders on the team, I’m asking them, ‘What’s the target audience? Who are we envisioning this for in the end? What do the people in the pipeline – the 3D modellers, the specific story, and things like sound and animation – need in what I’m about to draw?’

“All of that is just completely skipped and not addressed by AI image generation at all,” he continues. “You have results that you cannot fine-tune and control. And the more you make it fine-tuneable, the more it may as well be drawing.”

Nicholas is part of a global community of outspoken digital creatives campaigning for official regulation and government policy regarding the ethical and legal issues of AI image generation. In the United States, a $270,000 fundraiser organised by the Concept Art Association, which aims to chase down policymakers in Washington DC to help put this debate on the table, has nearly hit its target.

Moreover, a mass protest on sharing platform ArtStation, which began in mid-December, saw users plaster repeated images of a stop sign captioned ‘NO TO AI GENERATED IMAGES’ over their portfolios after they realised AI-generated art was being featured alongside human artwork on the site’s Explore page.

“The protest image I saw took over for weeks,” says Nicholas of the ArtStation protest. “It was really heartening to see everyone in the community agree that a place where artists are supposed to gather and share their professional portfolios of work is not a place where AI-generated art should be. We’re seeing people across lines of socio-economic status, and different strata within their companies – like art directors and CEOs – take up the call as well as new artists trying to break in on the ground level. Everybody sees this as a great issue.”

My reaction to people saying "ai makes art accessible to everyone": pic.twitter.com/20Swnsvsss

— DTM, Braytech CEO (@destiny_thememe) December 15, 2022

But for other members of the digital art sector, taking up arms against the AI companies that develop image generators using copyrighted artwork from the internet is to fight a losing battle. “I really don’t approve of the fact that some companies have scraped data, but the problem is that this data was not protected, so you can’t really attack them,” says founder of digital art magazine IAMAG, 51-year-old Patrice Leymaire. Bots have been scraping images from the web for decades, he explains; the machine learning algorithms have already learned the process to recreate artworks digitally, and no longer require the original dataset they’ve been trained on. Trying to fight against what’s already been done, Patrice says, is a waste of time.

But he does say there are steps individual artists can take to protect their art from data scraping in the future. “In Photoshop for example, for almost a year there has been something called ‘content authenticity’, and you can track your image and how it’s being used online. This is one way. There are other tools, but people are not really aware of them.”

Many independent artists find that just thinking about the AI and digital art debate saps their creativity. Ronan Mahon, a 31-year-old freelance artist originally from Dublin and now based in Bavaria, Germany, says his productivity has suffered as a result of heated discussions. As the sole earner for his family, he has taken the decision to put the bigger questions out of his mind. “Honestly one of my new year’s resolutions was to think about the whole AI thing less, as it affects my mood,” he says.

“It’s hard to be creative when you get bogged down. Probably most upsetting is that I’ve had young artists ask me on Discord what the point of the career path I’ve taken is, now that AI is starting to make large inroads. They’ve said they’re not sure why I would choose it, as it seems untenable now.” However, Ronan continues to amplify the voices of outspoken artists like Nicholas Kole, and has also taken down his ArtStation gallery, which had around 7,000 followers, in protest.

In addressing the huge concerns surrounding AI’s place in art, the academic world is seeing an increased intermingling of technology and the arts through multidisciplinary collaboration. Drew Hemment, researcher at the University of Edinburgh and creative AI lead on the AI & arts interest group at the Alan Turing Institute, explains that historical bias within the datasets AI image generators use is possibly the biggest problem.

“The model of machine learning finds patterns in historic data,” he explains. “But that historic data represents our world today – warts and all. The data these models are trained on reflects the bias, the racism, the sexism, the economic disparities… all of the harmful biases in society are reflected in the data, and then the algorithms amplify that bias,” he adds.

This harmful bias manifests in the perpetuation of social stereotypes in AI-generated images, from search terms like ‘CEO’ producing men in suits in earlier versions of DALL-E, to traditionally feminine adjectives such as ‘gentle’ and ‘supportive’ being more likely to render images of women. Many examples of AI-generated art also betray stylistic tendencies towards Eurocentrism, as the dataset scraped from the web includes an over-representation of images of fine art from the dominant culture of the West.

Ai art situation in a nutshell pic.twitter.com/2ZIiuSsjx7

— Lora Zombie (@LoraZombie) January 20, 2023

To address concerns about historic bias and the unethical use of data, teams of researchers like Drew are trying to develop algorithms that are transparent, meaning the factors affecting the decisions made by the algorithms are visible to those who use them. Drew’s lab also develops creative AI tools that enable artists to train their own model, as opposed to having to use “black box” tools where only the algorithm’s input and output are open to inspection by users, while its internal workings remain inaccessible.

But despite the proliferation of AI-generated imagery, Drew, Nicholas and Patrice all agree that the human element of individual creativity is what makes a true work of art. The final output from AI image generation can only ever be a variation of styles, scenes and images it has analysed before. While designed to innovate, it produces aesthetic products that are inescapably homogeneous.

For Nicholas, art is defined by the tangible presence of a thinking, feeling, problem-solving human. “Every detail that I’m adding to a design is intended to communicate,” he says. “I hope that, more than the negative elements of this conversation, there is this understanding that the concern of artists comes from a deeper love for what we do.”

As the world experiences a technological revolution that is growing at an exponential rate, closer collaboration between research institutions, lawyers and artistic practitioners on issues surrounding AI in the arts is vital. Drew hopes that, in future, AI technologies which support human agency will increase the scope for human creativity – rather than stifle it.

“Basically, the right way to think of AI is as tools,” says Drew. “We might call them a medium. But they’re not a replacement for humans. There’s still a person feeding in the prompts – the machines don’t have a purpose, an itch to scratch, or a vision to bring to the world. In that sense we can see it as another tool: just like a paintbrush is a tool, a synthesiser is a tool, Photoshop is a tool. It brings with it a whole set of new capabilities, which is a massive paradigm shift.”

One possibility for the use of AI alongside human creativity is a workflow described as a ‘sandwich model’, where a human creates a prompt and has prior input, then the algorithm generates an output, and the human artist takes that image and works upon it further. In 3D video game art, this could facilitate more technical processes like rigging, while in film animation it could be used to solve complex problems like VFX or FX.

“I think there is a threat to jobs, but this will open up new creative paths, and people will find ways to work with these tools within a creative practice,” Drew adds. “There is something fundamentally troubling about the idea that human creativity is being displaced. I think we have to fight for human creativity.”

Enjoyed this article? Like Huck on Facebook or follow us on Twitter and Instagram.

You might like

Remembering New York’s ’90s gay scene via its vibrant nightclub flyers

Getting In — After coming out in his 20s, David Kennerley became a fixture on the city’s queer scene, while pocketing invites that he picked up along the way. His latest book dives into his rich archive.

Written by: Miss Rosen

On Alexander Skarsgård’s trousers, The Rehearsal, and the importance of weirdos

Freaks and Finances — In the May edition of our monthly culture newsletter, columnist Emma Garland reflects on the Swedish actor’s Cannes look, Nathan Fielder’s wild ambition, and Jafaican.

Written by: Emma Garland

Capturing life in the shadows of Canada’s largest oil refinery

The Cloud Factory — Growing up on the fringes of Saint John, New Brunswick, the Irving Oil Refinery was ever present for photographer Chris Donovan. His new photobook explores its lingering impacts on the city’s landscape and people.

Written by: Miss Rosen

Susan Meiselas captured Nicaragua’s revolution in stark, powerful detail

Nicaragua: June 1978-1979 — With a new edition of her seminal photobook, the Magnum photographer reflects on her role in shaping the resistance’s visual language, and the state of US-Nicaraguan relations nearly five decades later.

Written by: Miss Rosen

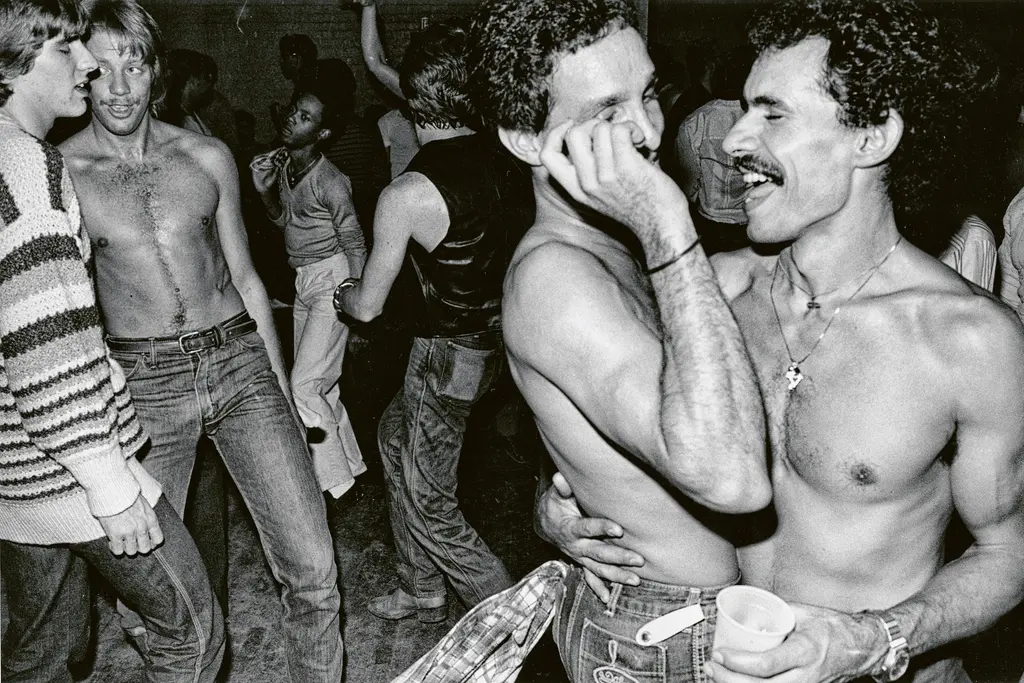

A visual trip through 100 years of New York’s LGBTQ+ spaces

Queer Happened Here — A new book from historian and writer Marc Zinaman maps scores of Manhattan’s queer venues and informal meeting places, documenting the city’s long LGBTQ+ history in the process.

Written by: Isaac Muk

Nostalgic photos of everyday life in ’70s San Francisco

A Fearless Eye — Having moved to the Bay Area in 1969, Barbara Ramos spent days wandering its streets, photographing its landscape and characters. In the process she captured a city in flux, as its burgeoning countercultural youth movement crossed with longtime residents.

Written by: Miss Rosen